To solve this problem I went about modifying a model given by an Esri training model on identifying closed depression where cloudburst flooding might pool up and cause infrastructure damage. I decided to try to use this model to find sinkholes here in Alabama, but this model could be used elsewhere.

For this project I focused on Morgan County, Alabama because it has some of the highest numbers of sinkholes for any county in Alabama. I got a shapefile from the Alabama Geological Survey of all the recorded sinkholes in Alabama and clipped that to the shape of Morgan County. This was my control to compare my model to.

|

| Morgan County in red. |

|

| Digital elevation model of Morgan County, Alabama. Elevation is in meters. |

To begin, you first need to get a digital elevation model (DEM) of the area you are working in. A 10-meter is probably going to be the easiest to come by, but the resolution isn't the greatest. If you can get 5, 3, or 1-meter DEMs you are going to get much better data. I managed to get a 1-meter DEM for a small section of Morgan county that had a large cluster of sinkholes.

The National Map is one of the best places to get elevation data. I got whole county 10-meter DEMs from Alabama View. 1-meter DEMs can sometimes be found here, but if you select a whole county the file size will be too large, and they won't let you download more than 8 Gb for some reason. So, select your study area using order by interactive map.

|

| This was my final model and as you can see it has my own inputs still in it. |

The basic workflow is as follows:

1. Run a fill on the DEM with no Z-limit set

2. Run the fill again on the original DEM with a Z-limit of about 1 meter. This may take some tweaking for find what works best. The smaller the Z-limit the longer it will take to run. My final run I used a Z-limit of 0.5 (meters).

3. Run the minus tool on the two DEMS. I named the DEM with no Z-limit 'all_sinks' and the 0.5 meter Z-limit one 'small_sinks'. You want to subtract the 'small_sinks' from 'all_sinks'.

4. Give the output of the Minus to the Con tool. You can run Con by itself and give it the appropriate raster, Value > 1 for the expression, and 1 for input true raster. Alternatively you could use the Raster Calculator and feed it Con("<sinks_minus_output>", 0, 1, "Value > 1"). Either way you do it 1 should be all the sinkholes found from subtracting the two rasters, and zero everything else.

5. The conditional raster output from the Con tool is then fed into the Region Group tool.

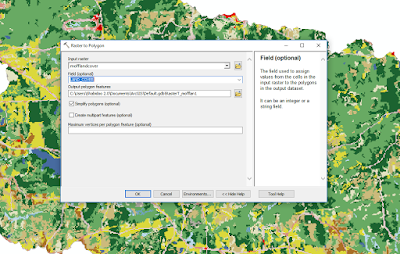

6. Run Raster to Polygon on the Region Group output. This will turn each area on the raster with a value > 1 into its own polygon feature. Now we will be able to select them individually, find perimeters, or calculate area.

7. Give that output to the dissolve tool. (optional)

8. Lastly we can feed the dissolve data into the Make Feature layer. (optional) I'm not so sure these last two steps were absolutely necessary, but Esri had them in their training model. I would probably skip these to save time.

Above I showed the model I built in the Model Builder. You can do this if you want the model to just run the entire thing on its own. I found that this can be finicky and may crash for no real reason. A second option is to make the model, validate it, then export it as a Python script. You can do this in the Model Builder by going to Model -> Export -> To Python Script. This will convert your model into, well, a Python Script. Then you can either run it stand alone, or drop the script into a new model as you would a tool, and run the model with just the script. I found this was less prone to weird cashes and errors. For a look at my model as a Python script take a look here.

My study area was an area of northern Morgan county I selected which had a large cluster of sinkholes. There were 195 sinkholes that were recorded by the Alabama Geological Survey as of 2011 in this area. The model managed to identify 152 of the 195 or 78%. However, the model also drew 3313 features. That means 3161 were false positives. Many of the false positives were ditches, roads, streams, etc. Most of these were just noise, which is what Fill is supposed to fill in.

Of all the true sinkholes the smallest had an area of 694 meters^2. The smallest perimeter was 115 meters. I decided to remove all features smaller than 115 meters perimeter. This cut all the features down to 601. Many of the drawn features actually contained multiple sinkholes, 50 in total.

This model is far from perfect, but with more data, time and tweaking it could be much better. Doctor and Young (2013) did a similar but much more refined process for finding sinkholes in northern Virginia for the USGS.